The AI revolution is in full swing. Modern artificial intelligence methods for learning, reasoning, planning, and optimizing are changing the rules of many industries, from advertising and social media to mining and manufacturing. But not video games. At least, not yet.

This may sound strange to many. Don’t all video games have AI that controls the characters in the game? Well, not really. The kind of AI that controls the non-player characters (NPCs) in most games is more like an intricate set of rules, a mechanism manually created by the game’s designers. However, in recent years the video game industry has woken up to the revolution happening in so many other industries. Major game developers now have AI research divisions, and several game AI startups exist, including modl.ai, a company I co-founded. The groundwork has also been laid by almost two decades of academic research on AI for video games. In what follows, we will generally start from technology that already exists in games and then look at new ideas that have been explored in research but have not yet made it into game development.

Alena Darmel

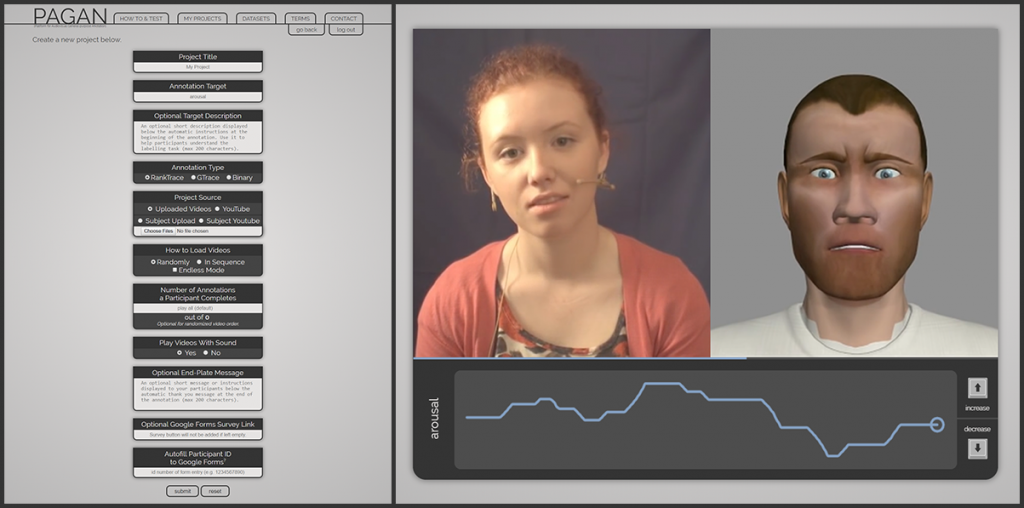

Let us start with one area where modern machine learning is already increasingly being used in games: understanding players. Most games, at least those with any significant production budget, frequently “phone home”: they send data about the player to their developer’s servers. This data can be sparse, such as only when each play session starts and stops, or much more detailed, including every action the player took. Machine learning methods are then applied to this data to learn all manner of things about the players, in particular to predict what they will do. For many developers, the most important questions are when a player will stop playing, and which players will pay money for boosts and in-game items, and under which circumstances. These questions are obviously of great interest to game developers because they are tied directly to the bottom line.
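To make the churn question concrete, here is a minimal sketch of how such a prediction could be set up: logistic regression, trained with plain full-batch gradient descent, on two per-player telemetry summaries. The features (average session length, sessions in the past week), the tiny hand-written dataset, and all function names are hypothetical illustrations rather than any developer’s actual pipeline; real systems use far more players and features.

```python
import math

# Hypothetical telemetry summary per player:
# (average session length in minutes, sessions in the last 7 days).
# Label 1 means the player stopped playing during the following week.
TRAINING_DATA = [
    ((5.0, 1.0), 1),
    ((8.0, 2.0), 1),
    ((12.0, 1.0), 1),
    ((30.0, 6.0), 0),
    ((45.0, 5.0), 0),
    ((25.0, 7.0), 0),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_scaler(xs):
    """Per-feature mean and population standard deviation (used for standardization)."""
    n = len(xs)
    means = [sum(col) / n for col in zip(*xs)]
    stds = [
        math.sqrt(sum((v - m) ** 2 for v in col) / n) or 1.0
        for col, m in zip(zip(*xs), means)
    ]
    return means, stds


def scale(x, scaler):
    means, stds = scaler
    return [(v - m) / s for v, m, s in zip(x, means, stds)]


def train_churn_model(data, lr=0.1, epochs=2000):
    """Logistic regression fitted by full-batch gradient descent on standardized features."""
    scaler = fit_scaler([x for x, _ in data])
    rows = [(scale(x, scaler), y) for x, y in data]
    weights = [0.0] * len(rows[0][0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in rows:
            # Gradient of the log loss: (predicted probability - label) * input.
            err = sigmoid(sum(w * v for w, v in zip(weights, x)) + bias) - y
            grad_w = [g + err * v for g, v in zip(grad_w, x)]
            grad_b += err
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias, scaler


def churn_probability(model, x):
    weights, bias, scaler = model
    z = sum(w * v for w, v in zip(weights, scale(x, scaler))) + bias
    return sigmoid(z)


model = train_churn_model(TRAINING_DATA)
print(churn_probability(model, (6.0, 1.0)))   # short, rare sessions
print(churn_probability(model, (35.0, 6.0)))  # long, frequent sessions
```

Standardizing the features keeps gradient descent well-conditioned; without it, the session-length feature would dominate the step size.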

But the use of machine learning to model players is still in its infancy. Research suggests that many more aspects of players can be modeled, and that such models can be put to many more uses. For example, we can learn to predict what players will experience based on how they play and what content they encounter. Such models could predict whether a player with a particular playstyle will find a particular kind of level fun to play, or a particular character intriguing to interact with. We could also train models that predict at a more granular level what the player is going to do, such as which path they will choose at a fork in the road, or which strategy they will choose when facing an important challenge such as a boss fight. Now, there usually isn’t enough information in a single gameplay session to learn a good model of a player, but the data can be combined with what has been learned about other players by clustering players into types. It is also possible to learn models of a player across multiple games, something which should particularly benefit platform holders and publishers with multiple games.
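The clustering idea can be sketched with k-means: group players by a few playstyle features, then let a new player with little data of their own inherit what is known about the nearest cluster. The three features, the toy data, and the helper names below are assumptions made for illustration; real player models would use richer telemetry and possibly other clustering methods.

```python
import math

# Hypothetical playstyle features per player, each in [0, 1]:
# (share of playtime in combat, share of the map explored, share of side quests done)
PLAYERS = [
    (0.90, 0.20, 0.10), (0.80, 0.30, 0.20), (0.85, 0.25, 0.15),  # combat-focused
    (0.20, 0.90, 0.80), (0.10, 0.80, 0.90), (0.15, 0.85, 0.85),  # exploration-focused
]


def nearest(point, centroids):
    """Index of the centroid closest to point (Euclidean distance, Python 3.8+)."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


def kmeans(points, k, iterations=20):
    """Plain k-means with a deterministic initialization spread across the input."""
    centroids = [points[i * len(points) // k] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Move each centroid to the mean of its members (keep it if the cluster is empty).
        centroids = [
            tuple(sum(values) / len(members) for values in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return centroids


centroids = kmeans(PLAYERS, k=2)
# A newcomer with one session of data is assigned to the closest player type,
# whose aggregate statistics can then stand in for the missing history.
print(nearest((0.95, 0.10, 0.05), centroids))  # prints 0 (the combat-focused cluster)
```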

These models can be used for many purposes. Many games, racing and other sports games in particular, already perform a simple form of adaptation to the player under the name dynamic difficulty adjustment. With better player models, we will be able to do much more. We could select content that not only matches a player’s skill level (keeping in mind that skill is not one-dimensional: some players might be good at strategy, while others may have quick reactions or a good understanding of characters’ emotions), but that we also know the player will like.
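At its simplest, dynamic difficulty adjustment is a feedback loop. The sketch below is a hypothetical controller, not how any particular game implements it; it keeps a separate difficulty value per skill dimension, reflecting the point that skill is not one-dimensional. The skill names, the target win rate of 0.6, the window size, and the step size are illustrative assumptions.

```python
from collections import defaultdict, deque


class DifficultyAdjuster:
    """Per-skill dynamic difficulty adjustment (a minimal, hypothetical sketch).

    Each skill dimension (e.g. "reflexes", "strategy") keeps its own difficulty
    in [0, 1] and its own window of recent outcomes, so a player can face hard
    strategic challenges and gentle reaction-time challenges at the same time.
    """

    def __init__(self, target_win_rate=0.6, window=5, step=0.1, start=0.5):
        self.target = target_win_rate
        self.step = step
        self.difficulty = defaultdict(lambda: start)
        self.history = defaultdict(lambda: deque(maxlen=window))

    def record(self, skill, player_won):
        """Log one challenge outcome and return the updated difficulty for that skill."""
        outcomes = self.history[skill]
        outcomes.append(1 if player_won else 0)
        win_rate = sum(outcomes) / len(outcomes)
        level = self.difficulty[skill]
        if win_rate > self.target:
            level += self.step  # player is cruising: make it harder
        elif win_rate < self.target:
            level -= self.step  # player is struggling: ease off
        self.difficulty[skill] = min(1.0, max(0.0, level))
        return self.difficulty[skill]


dda = DifficultyAdjuster()
for _ in range(4):
    dda.record("strategy", True)   # wins every strategic challenge
    dda.record("reflexes", False)  # loses every reaction-time challenge
print(dda.difficulty["strategy"], dda.difficulty["reflexes"])
```

A richer version would replace the win-rate signal with a learned player model’s prediction of enjoyment, which is the step beyond classic difficulty adjustment that the paragraph above points to.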

So, for example, a game could choose to give a player a new level that it expects will offer a good but not overwhelming challenge and that the player will enjoy. Or it might let the player encounter non-player characters with personalities and stories it thinks the player will find intriguing. With enough data and good enough machine learning methods, we will be able to predict not only what a player will enjoy right now, but also what they will enjoy in the future, based on how other players’ tastes and capabilities have evolved as they play. The game will then be able to decide when to introduce new types of quests and dilemmas or new visual environments, so that the player doesn’t get stuck in a filter bubble of game content.

These model-driven adaptation mechanisms could simply select which content to serve the player from among sets of levels, characters, quests, and so on, created by the designers of the game. But this kind of adaptation becomes more powerful if the game can automatically create new content that fits the player; in other words, if we can combine player modeling with procedural content generation (PCG). Let’s look at how AI will advance PCG next.

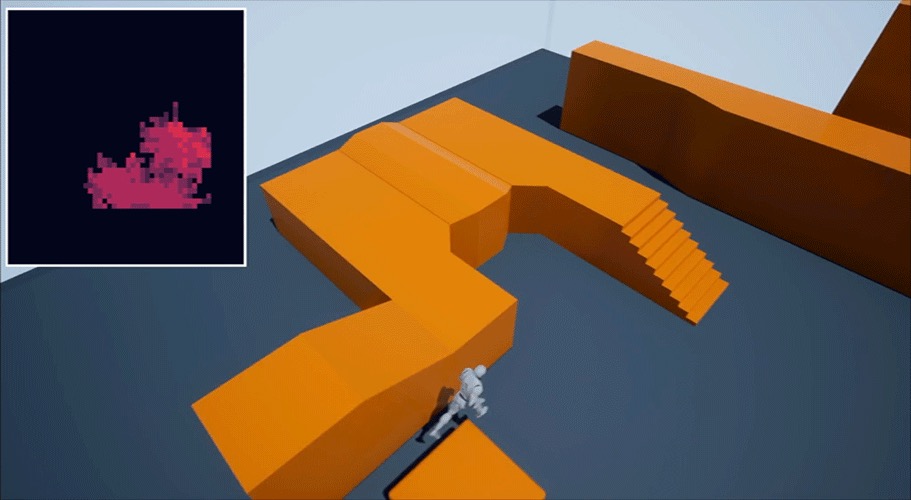

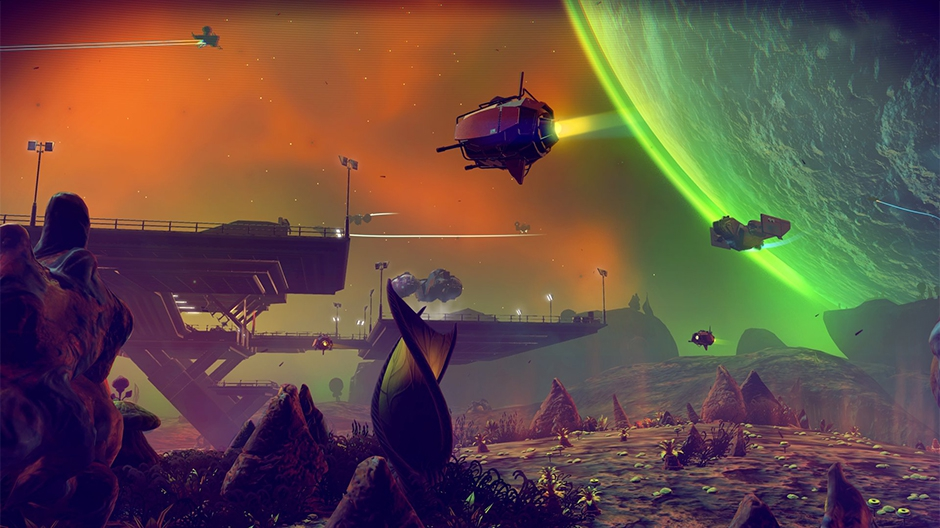

PCG has been part of many games since the early eighties. Lately, it has seen prominent use not only in roguelikes like Diablo and Hades, where levels are generated, but also in strategy games such as the Civilization series, where whole worlds are generated, and exploration games such as No Man’s Sky, where planets are generated with unique flora and fauna. Some forms of content generation are also used for specific tasks in a large variety of 3D games, for example for creating all those trees and bushes, a repetitive task you don’t want to waste game artists’ time on. The PCG algorithms that make these games possible rely heavily on randomness, and it’s hard to make them do what you want; as a result, there are many limits to what you can do with PCG right now. However, a string of AI innovations has brought us new PCG methods that open up radically new possibilities for generating game content.

Some of the new PCG methods treat generation as a search through the space of possible content. For example, such a method could create a new level by searching for one that has a specific level of challenge. By combining this approach with models of what players do and what they enjoy, we could automatically generate completely new content for particular players. Other methods build on machine learning: generators are trained on existing content to find the patterns it has in common, and then use those patterns to produce new content. For example, a generator could be trained on a set of existing in-game characters, including their visual styles, speech patterns, and behavior, to generate new characters that resemble the existing ones in some ways. So we could imagine artists and designers making only some of the content for a game and letting the algorithms produce more content in the same style. It will also be possible to pre-train generators on content from existing games so that they can quickly learn to generate good content for new games. A third, very new approach is to use a trial-and-error procedure to create content-generating agents. These agents might work in tandem with human creators to create new game content, combining the creative strengths of humans and machines.
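Generation-as-search can be illustrated with a toy example: a stochastic hill climber over a one-dimensional platformer level, where a level’s “challenge” is crudely approximated by the share of tiles that are gaps, and a hard constraint rejects gaps too wide to jump. The tile encoding, the `MAX_JUMP` limit, and the challenge measure are all hypothetical stand-ins; in the search-based approach described above, the evaluation would more plausibly come from simulated play or a learned player model.

```python
import random

GROUND, GAP = "=", " "
MAX_JUMP = 3  # hypothetical: widest gap (in tiles) the avatar can clear


def gap_widths(level):
    """Widths of all runs of consecutive gap tiles."""
    widths, run = [], 0
    for tile in level + GROUND:  # trailing sentinel flushes the last run
        if tile == GAP:
            run += 1
        elif run:
            widths.append(run)
            run = 0
    return widths


def is_playable(level):
    """Hard constraint: solid start and end, and every gap is jumpable."""
    return level[0] == GROUND and level[-1] == GROUND and all(w <= MAX_JUMP for w in gap_widths(level))


def challenge(level):
    """Crude, hypothetical stand-in for difficulty: the share of tiles that are gaps."""
    return level.count(GAP) / len(level)


def fitness(level, target):
    if not is_playable(level):
        return -10.0  # worse than any playable level, so never accepted
    return -abs(challenge(level) - target)


def generate_level(length=40, target=0.25, iterations=2000, seed=0):
    """Stochastic hill climbing through the space of levels toward a target challenge."""
    rng = random.Random(seed)
    best = GROUND * length  # flat level: trivially playable, zero challenge
    best_fit = fitness(best, target)
    for _ in range(iterations):
        i = rng.randrange(1, length - 1)  # keep the start and end tiles solid
        flipped = GAP if best[i] == GROUND else GROUND
        candidate = best[:i] + flipped + best[i + 1:]
        candidate_fit = fitness(candidate, target)
        if candidate_fit > best_fit:  # keep only strict improvements
            best, best_fit = candidate, candidate_fit
    return best


level = generate_level()
print(f"[{level}]", challenge(level))
```

Swapping `challenge` for a model’s prediction of how a specific player would experience the level is what turns this generic search into the player-adapted generation the text describes.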

With all these new AI methods for content generation, what can be generated? Potentially anything, from textures to quests to game rules and concepts. AI will not replace human game designers and artists, but instead give them superpowers: a level designer will be able to draw up the general shape of a level and place key items, and have the rest of it auto-generated; they will then be able to adjust the generated level using high-level instructions like “make it easier for players with slow reflexes” or “make it less symmetric”. In some cases, the PCG methods will function more like inspiration for the designer. For example, a designer could ask the algorithms to create a new set of coherent game rules based on a sketch of a game mechanic, and then take those rules and refine them further manually.

At the other end of the automation spectrum, some of these methods can be integrated into games while they are running to create new, personalized experiences for players. Imagine a player exploring an open-world game and coming upon a new town. This town was not made by the game’s designers but created by the game in response to the player’s actions. The game has observed what the player has done in its world so far, and has combined this with information about what they have done in other games and with its knowledge of what other players have done in its world. Based on this, the game creates a town that it thinks will give this particular player new and interesting experiences. This might include the architectural style of the town, the color scheme and textures, the personalities of the people who live there, the vehicles and items you can find as a player, and the quests you may attempt there. Such a game would be truly open-ended and unique to every player.

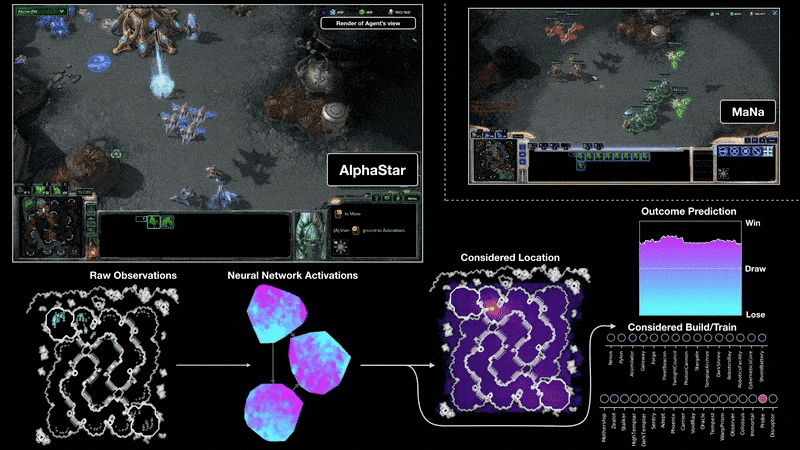

In the last decade, enormous research efforts have gone into creating AI agents that can play games. From board games like Chess and Go to classic Atari games to FPSes like DOOM and Counter-Strike, we’ve seen some very impressive work on building or training agents that play well. This work, often carried out by large, well-funded teams at major tech companies, mostly serves to develop and test new AI methods. These agents, in themselves, are not very relevant to game development. Most games do not need better-performing agents as opponents, as it is easy to challenge players in other ways. (There are some exceptions to this, such as complex strategy games, where better-performing agents are desirable.) However, there are other uses for game-playing agents.

One of the most promising applications for AI agents that play games is game testing. Like all software and other technical systems, games need to be not only developed but also tested extensively. Games are tested to ensure that they don’t crash and have no graphical glitches, frame rate drops, or other visual issues, but also that levels are completable, there are no hidden shortcuts, and the game is balanced, among other things. Testing is very labor-intensive and consumes a sizable chunk of most game development budgets. If a game is not tested enough and ships with bugs, this can lead to scathing reviews. Therefore, methods that can automate game testing, partially or completely, are immediately relevant for modern game productions.

Agents that play a game well can be very useful for game testing, as they can check whether a game, or part of it, can be won. But we can do many more types of testing if we have a wider repertoire of agents. Agents that play in a human-like manner, or like a specific type of human player, can test what would happen if a part of the game is played in a particular style. Such agents can be built using models trained on human playtraces, as discussed previously. Other agents can be configured to explore, so as to find as many paths through the game as possible. This can help the game’s developers find unexpected situations and strategies in the game.
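For a simple tile-based level, an exploring test agent can be approximated by breadth-first search over every reachable position. The sketch below reports whether the goal can be reached at all and flags a possible shortcut when the shortest route is shorter than the designer intended. The map format, the `min_intended_steps` parameter, and the function names are hypothetical; real games need agents that act through the game’s own controls and physics, which is where learned and human-like agents come in.

```python
from collections import deque

# Hypothetical tile map: S = start, G = goal, # = wall, . = floor
LEVEL = [
    "S.#.",
    "..#.",
    "....",
    "#..G",
]

MOVES = ((1, 0), (-1, 0), (0, 1), (0, -1))


def find(level, symbol):
    """Return the (row, column) of the first occurrence of symbol."""
    for r, row in enumerate(level):
        c = row.find(symbol)
        if c != -1:
            return r, c
    raise ValueError(f"{symbol!r} not found in level")


def explore(level):
    """Breadth-first exploration from the start.

    Returns a dict mapping every reachable tile to the length of the
    shortest route to it, i.e. every place an agent can get to.
    """
    start = find(level, "S")
    steps = {start: 0}
    frontier = deque([start])
    while frontier:
        r, c = frontier.popleft()
        for dr, dc in MOVES:
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < len(level) and 0 <= nc < len(level[nr])
            if inside and level[nr][nc] != "#" and (nr, nc) not in steps:
                steps[(nr, nc)] = steps[(r, c)] + 1
                frontier.append((nr, nc))
    return steps


def check_level(level, min_intended_steps):
    """Completability and shortcut check for one level."""
    steps = explore(level)
    goal = find(level, "G")
    if goal not in steps:
        return "FAIL: goal is unreachable"
    if steps[goal] < min_intended_steps:
        return f"WARN: possible shortcut, goal reached in {steps[goal]} steps"
    return f"OK: goal reached in {steps[goal]} steps"


print(check_level(LEVEL, min_intended_steps=6))  # prints "OK: goal reached in 6 steps"
```

Exhaustive search like this only scales to small state spaces, which is one reason research turns to learned exploration agents for full games.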

In addition to testing, there are many potential uses for agents that can play games, particularly if they have been trained to play in the style of human players. Game balancing is one. By testing a game with many different AI-driven agents, we can see how it would be played by different types of players and adjust it accordingly. For example, we may want a role-playing game to be only moderately more difficult for players who rush through it than for completionists who meticulously scour every area, while being much harder for trigger-happy players than for those who focus on diplomacy. We can let an algorithm automatically play the game through with different playing styles and gradually adjust its parameters to achieve the desired balance. The advantages of this approach only grow in multiplayer games: manually balancing a Battle Royale game with a hundred people on the same map is an immense challenge, starting with the fact that you need a hundred committed humans who understand the game every time you want to test a design change.
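The balancing loop can be sketched with simulated archetypes. Below, two hypothetical playstyles fight a boss in a deliberately simplistic turn-based combat model, and a grid search over boss hit points picks the value whose simulated win rates come closest to the designer’s targets. The archetype stats, target win rates, and combat rules are invented for illustration, and the grid search is a plain stand-in for the more gradual parameter adjustment described above.

```python
import random

# Hypothetical archetypes: damage dealt per turn, and the chance of avoiding
# the boss's attack (through preparation, positioning, or careful play).
ARCHETYPES = {
    "rusher": {"attack": 12.0, "avoid": 0.1},
    "completionist": {"attack": 9.0, "avoid": 0.4},
}
# Hypothetical design goal: rushers should win somewhat less often than completionists.
TARGET_WIN_RATE = {"rusher": 0.5, "completionist": 0.7}
CANDIDATE_BOSS_HP = list(range(40, 301, 20))


def simulate_fight(style, boss_hp, rng, player_hp=100, boss_attack=15):
    """One fight in a toy combat model; returns True if the player wins."""
    while True:
        boss_hp -= style["attack"] * rng.uniform(0.8, 1.2)
        if boss_hp <= 0:
            return True
        if rng.random() >= style["avoid"]:
            player_hp -= boss_attack
            if player_hp <= 0:
                return False


def win_rate(style, boss_hp, trials=500, seed=0):
    """Monte Carlo estimate; a fixed seed gives every candidate the same starting random stream."""
    rng = random.Random(seed)
    return sum(simulate_fight(style, boss_hp, rng) for _ in range(trials)) / trials


def balance(candidates):
    """Grid search for the boss HP whose simulated win rates best match the targets."""
    def error(boss_hp):
        return sum(
            (win_rate(ARCHETYPES[name], boss_hp) - TARGET_WIN_RATE[name]) ** 2
            for name in ARCHETYPES
        )
    return min(candidates, key=error)


best_hp = balance(CANDIDATE_BOSS_HP)
for name, style in ARCHETYPES.items():
    print(name, best_hp, win_rate(style, best_hp))
```

Because the estimates are noisy and the combat model is a formula rather than a game, a real setup would use many more trials, several parameters at once, and agents that actually play the game in each style.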

One way game-playing AI can be used to improve NPCs is by providing better sidekicks or team members. It is a common observation that NPCs who are supposed to help you are far less capable than those who are supposed to thwart your progress. Players often complain about sidekicks standing in the way or otherwise obstructing gameplay. With better player modeling, we can create AI agents that predict with some accuracy what the player will do, and plan around that to carry out their goals.

Speaking of NPCs, there will also be a revolution in the use of natural language by game characters. Almost universally, game characters “speak” in pre-written sentences, and interaction usually entails following a “dialogue tree” where the player can choose from a very limited set of things to say. This is a very stifling form of interaction, and it was never anyone’s idea of the best way to interact with game characters. It is more of a workaround, which has become the default over the past four decades of video games because we don’t know how to actually make game characters talk. The massive progress in deep learning-based language models promises a way of ultimately getting around this. By giving language models the right prompts, it is now possible to generate (written) interactive dialogue on particular topics and in particular styles. But it is still very hard to control the output of language models, and NPCs built this way may end up saying things that interfere with the game’s design, or worse. Still, given the pace of progress in research on tuning and controlling language models, it’s likely that we will see deep learning-powered NPC dialogue in the not too distant future.

So far, we have not touched upon the aspect of video games that, since the medium came into existence, has been the most salient arena of technical progress: graphics. AI is usually associated with behavior, which might make one wonder how these techniques would apply to graphics. However, deep learning has made inroads into various parts of the graphics pipeline. This includes animation, where traditional motion capture can now be augmented by neural network-driven procedural animation, and the generation of lifelike faces and textures. It is also possible to train neural networks to act as a filter on the complete graphics output so that it is transformed into a particular style; for example, a clean-looking render may be transformed into a grittier, more naturalistic scene. In the future, we may see a game’s rendering engine doing less work by producing only a rough render, which is then translated into the desired visual style by a deep learning filter.

We have here described a wide range of AI inventions that will impact video games. Some of these are already in production, most are at the stage of advanced research prototypes that have not yet been integrated into released games, and some are in early research stages. Perhaps the lowest-hanging fruit is game testing via bots and certain forms of procedural content generation, whereas NPC dialogue via language models is at the stage where we have not gotten it working reliably in the lab yet. However, it would be a mistake to only think of these methods in isolation. Most of them can augment and even rely on each other. For example, better PCG will make it possible to train more diverse and generally capable game-playing agents, and these agents will in turn make it easier to test games better and to develop better content generation methods (which partly rely on testing the content to guide generation). Better data from players will enable better player modeling, which will enable us to create more human-like playing agents; but better playing agents will also improve our models of human players, as there are more trajectories to compare to. All, or at least most, parts of this machine feed each other. Thus, there are significant advantages to maintaining active R&D across this whole stack, if one plans on advancing the state of the art in AI for games.

Now, you may wonder, why care about games? Aren’t they quite insignificant compared to the real world? The modern world begs to differ. Video games are key drivers of technical progress and an ever-growing industry. For many millennials, video games are the most important medium of expression and consumption, and games are poised to eclipse traditional broadcast media as new generations grow up native to gameful interaction. Video games are used to teach, train, inform, and persuade. They are also used as testbeds and training environments for the AI systems that will run the world of tomorrow, from self-driving cars to management systems. Indeed, there is a sense in which video games might become so ubiquitous that we cease to identify them as a separate medium. The Metaverse is forecast to be the next generation of digital presence and interaction; interconnected, persistent, multi-person worlds where we can carry out a wide array of activities and conduct much of our daily lives. In other words, the Metaverse is the spiritual successor to today’s multiplayer online games, building on the technology that has been developed for video games. The technology we build for video games today will underpin the Metaverse of the future. Video games are perhaps the most important domain to develop artificial intelligence for.