Making a videogame is never an easy task, and the bugs we encounter along the way don’t help. Sometimes a mechanic that worked just fine yesterday is no longer behaving as it should. Or two features have been introduced to the game that don’t play nicely with each other. The more of a game you have built, the more bugs are going to creep in. One way we can begin to tackle this is through automated testing: a process where tests run on our games automatically. Here at modl.ai, we’re taking this one step further, with AI testers that can become part of the automated test suite to improve the quality of our games. But what benefits does all of this bring? Here are our top five reasons why developers should be migrating towards automated and AI testing:

1. Test the game with AI that plays like a human

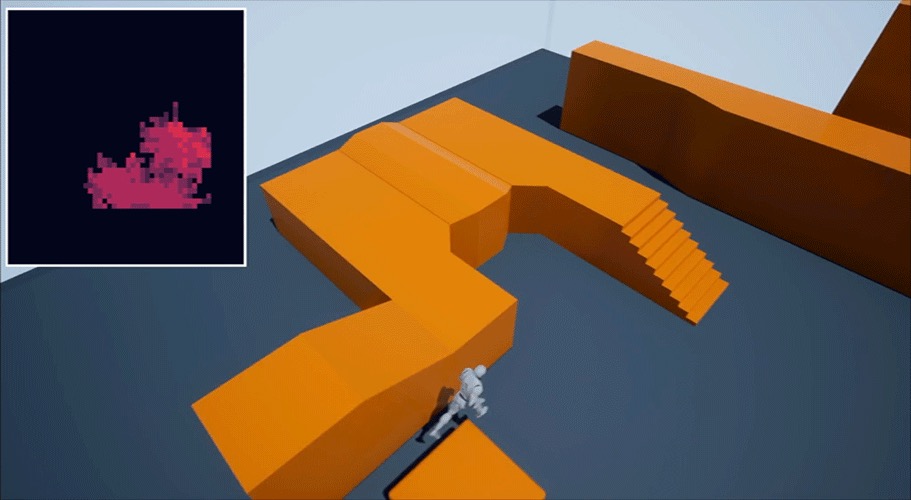

Testing is often a slow and difficult process, with quality assurance teams needing to examine a myriad of possible situations that could result in bugs or faults in the game. Every gameplay mechanic needs to be put through its paces in a variety of different situations. What if two mechanics can be used together, like jumping in the air and attacking at the same time? We need to test them not only individually but also in every combination, to see if the two play nicely with each other. This requires testers to sit and experiment with those mechanics in the game, in scenarios players would expect. Through AI, we can now tell these systems to explore the world and try testing mechanics in-game, removing the need to manually identify every possible testing scenario and instead encouraging the AI to actively seek out the bugs we’re looking to find. This can help stop many of the bugs that players often find after release from ever getting past developers.

To see how this works in practice, check out this video from AI and Games in which Massive Entertainment, the developers of ‘Tom Clancy’s The Division 2’, introduced AI testers that play their online game on a daily basis in search of bugs.
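
To give a feel for how quickly combinations of mechanics add up, here is a minimal Python sketch that lists every pair of mechanics that would need a test. The mechanic names and the `mechanic_pairs` helper are made up for illustration and are not part of any modl.ai tool.

```python
from itertools import combinations

# Hypothetical mechanic names; a real game would list its own.
MECHANICS = ["jump", "attack", "dash", "crouch"]


def mechanic_pairs(mechanics):
    """Return every unordered pair of distinct mechanics to test together."""
    return list(combinations(mechanics, 2))


pairs = mechanic_pairs(MECHANICS)
for first, second in pairs:
    print(f"test scenario: {first} + {second}")
```

Four mechanics already produce six pairs, and ten would produce 45, which is why listing every scenario by hand stops scaling so quickly.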

2. Testing faster than ever before

Even the best quality assurance teams are limited by time. If we need to test jumping in every level, on every platform, and possibly from a myriad of angles, that’s going to take a lot of time and people power to achieve. Plus, with every new feature or level, the number of unique testing scenarios increases, and the risk that something will be missed begins to grow. Automated testing can speed up this process drastically by removing the need for human quality assurance teams to focus on repetitive tasks. We can also speed up the game’s execution or run it remotely on a testing server, allowing AI testers to complete hundreds, if not thousands, of tests in a fraction of the time it would take a person.
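
One way to picture "speeding up the game’s execution" is a simulation that advances in fixed ticks without waiting for real time to pass. The sketch below is a toy example in Python under that assumption: the jump physics, the `simulate` function and all of its parameters are invented for illustration and do not describe how any particular engine or modl.ai product works.

```python
TICK_RATE = 60  # simulated ticks per second of game time


def simulate(seconds_of_game_time, gravity=-9.8, jump_velocity=5.0):
    """Advance a toy jump tick by tick, with no real-time waiting.

    Returns the highest point the character reached.
    """
    dt = 1.0 / TICK_RATE
    height, velocity, peak = 0.0, jump_velocity, 0.0
    for _ in range(seconds_of_game_time * TICK_RATE):
        velocity += gravity * dt
        height = max(0.0, height + velocity * dt)  # the ground stops the fall
        if height == 0.0:
            velocity = 0.0
        peak = max(peak, height)
    return peak


# Ten seconds of game time finish almost instantly because nothing sleeps.
peak_height = simulate(10)
print(f"peak jump height: {peak_height:.2f}")
```

Because the loop never sleeps or renders, the same check can be repeated across many levels or parameter settings in the time a person would need for a single jump.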

3. Checking for new bugs even faster

Once a bug is squashed, the hard part is making sure it stays squashed! Sometimes a bug can come back, and there’s no guarantee that it returns for the same reasons. Typically that means a tester would need to revisit the issue again, and again, and again. But checking whether old bugs are occurring once more is incredibly time-consuming. Plus it gets in the way of searching for new bugs, taking up more time and resources. So why not automate it? We know what this bug looks like, so teach the system to keep an eye out for it in case it crops up again!
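
A common way to "teach the system" about a known bug is a regression test that replays the inputs which originally triggered it. Here is a minimal Python sketch of the idea. The toy `Player`, the `step` function and the recorded inputs are all hypothetical stand-ins for a real game and a real bug report.

```python
from dataclasses import dataclass


@dataclass
class Player:
    x: int = 0
    y: int = 0  # 0 is ground level


def step(player, action):
    """Toy stand-in for one game tick; a real test would drive the actual game."""
    if action == "right":
        player.x += 1
    elif action == "jump":
        player.y += 2
    elif action == "fall":
        # The fix for the (hypothetical) original bug: never drop below the ground.
        player.y = max(0, player.y - 3)
    return player


# Inputs saved from the original (hypothetical) bug report.
FELL_THROUGH_FLOOR_REPLAY = ["right", "jump", "fall", "fall"]


def replay_stays_above_ground(actions):
    """Replay recorded inputs and report whether the player stays above ground."""
    player = Player()
    for action in actions:
        step(player, action)
        if player.y < 0:
            return False
    return True


print(replay_stays_above_ground(FELL_THROUGH_FLOOR_REPLAY))
```

If a later change removes the clamp in `step`, the replay fails straight away, without a tester having to remember to check for that bug.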

4. Build a library of tests to keep your game clean

When a new feature is being built, it’s also useful to think about the tests you can use to check that it works as intended. Over time, your library of tests can continue to grow and cover many different facets of your game design. By combining code-based automated tests with AI testers, you get the benefit of checks that ensure the code runs as intended, while AI testers continue to explore the game in ways that a human player would. To learn more about how to build and balance your automated tests, check out Rob Masella’s GDC presentation on test-driven development in Rare’s Sea of Thieves: a wonderful introduction to integrating automated tests into large-scale productions.
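
The two kinds of test described above can sit side by side in the same library. The Python sketch below pairs a scripted check with a very simple exploratory one. The random agent is only a stand-in for an AI tester (it is not how modl.ai’s testers work), and the toy level, `move` and `in_bounds` are invented for illustration.

```python
import random

WIDTH, HEIGHT = 5, 5
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


def move(position, action):
    """Toy movement rule: step one tile, clamped to the level bounds."""
    dx, dy = MOVES[action]
    x = min(max(position[0] + dx, 0), WIDTH - 1)
    y = min(max(position[1] + dy, 0), HEIGHT - 1)
    return (x, y)


def in_bounds(position):
    """Invariant that should always hold: the player is inside the level."""
    return 0 <= position[0] < WIDTH and 0 <= position[1] < HEIGHT


def scripted_test():
    """Code-based check: one known input, one expected result."""
    return move((0, 0), "right") == (1, 0)


def exploratory_test(steps=1000, seed=0):
    """Random agent wanders the level; returns positions that broke the invariant."""
    rng = random.Random(seed)  # seeded so a failing run can be reproduced
    position, violations = (0, 0), []
    for _ in range(steps):
        position = move(position, rng.choice(list(MOVES)))
        if not in_bounds(position):
            violations.append(position)
    return violations


print(scripted_test(), exploratory_test())
```

The scripted test pins down behaviour you already expect, while the exploratory test looks for situations nobody thought to write down.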

5. Allow QA teams to focus on quality

Now, while we’re advocating for AI testing to help speed up and improve the game production process, this should not be at the expense of people. As discussed, quality assurance teams are a fundamental and intrinsic part of game development. And while we’re confident in our AI testing solutions, we’re also aware of the things they can’t do. An AI tester can’t spot graphical glitches like badly configured textures. It can report where a bug occurred and log how it happened, but it doesn’t understand how that error came to be. It can’t qualitatively evaluate how fun a mechanic is, or whether a level plays like it should. These are uniquely human traits, so it’s important that any AI testing process is built to keep humans in the loop: ensuring they get the final say on the bugs an AI finds, and that they get to focus on bringing out the quality in any game being designed.

Games are continuing to grow in scale and complexity, and while this will lead to some exciting stuff to play, it also means a lot more bugs that need to be clamped down on. The next step for improving quality assurance is a blended approach of automated and human testing: AI solutions handle the bulk of the workload, while testing teams figure out where best to put that resource to use and apply their expertise to ensure each game that hits the market is as good as it possibly can be.

AI testing is a growing practice across the games industry. Check out this talk from Electronic Arts in 2021 that details their efforts in building AI testing tools for their own internal projects.

With modl:test, we’re putting our expertise into practice by providing broader access to tools that are becoming more commonplace. Now is a great time to start thinking about how AI testing could change your game productions for the better.