The 1985 version of Super Mario Bros. has a glitch that lets you get stuck in an infinite loop in the now-legendary Minus World.

Testing a video game for bugs and glitches can be tiresome and repetitive, much like our friend Mario's infinite loop.

Recent progress in AI offers new ways to escape the QA-testing loop. Many of the developers we speak with are surprised to learn how powerful automated testing in video games has become. For both repetitive tests and exploratory testing, automated test bots have an edge over their hard-working human counterparts.

Automation of repetitive tasks

Let’s say that you encounter a bug during what was supposed to be a simple playtest. Now you have to figure out what the problem is and write a bug report, and a short, simple task has become time-consuming and complicated. Why does this happen? During game development, we push new content often, and we then play through the level we’re working on to make sure we didn’t break anything. But what if our change caused a bug somewhere else?

With AI-trained bots running regression tests, we get a warning whenever our changes break the game. That frees up time for developers and QA to work on other tasks.

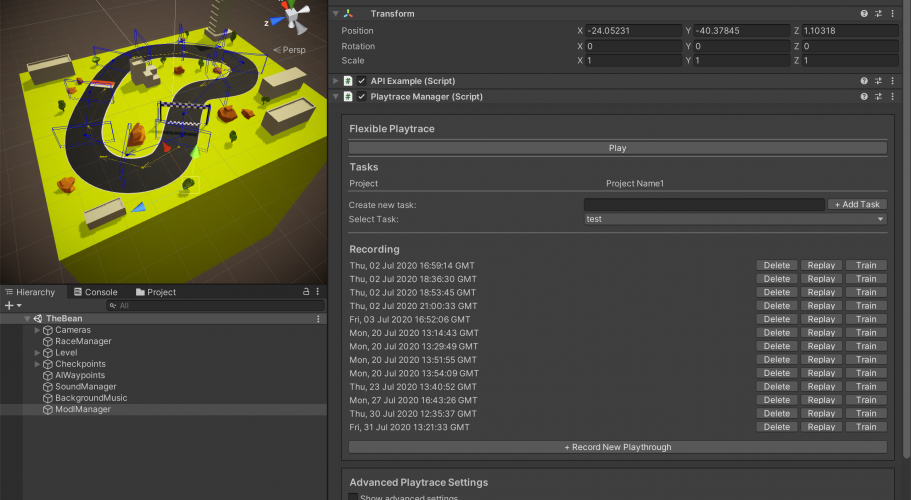

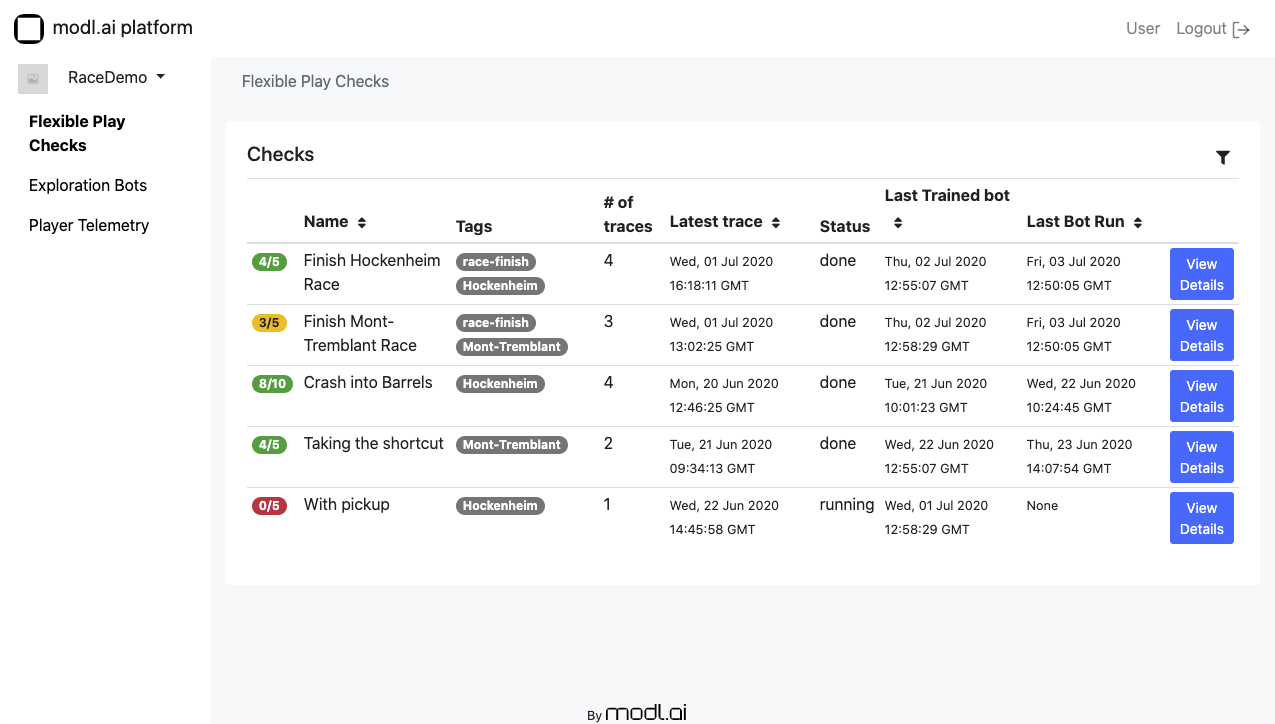

Here’s how automated playtesting works:

- Record a playthrough of the levels you wish to test.

- Train one or more bots to complete a part of your game.

- Adjust how the bot plays the game, if needed.

- The bot automatically plays the game after any committed changes.

- Developers and QA continuously receive reports on the bot’s performance.
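To make the last two steps more concrete, here is a minimal Python sketch of what a post-commit regression run could look like. Everything in it is hypothetical: `play_level(level, seed)` stands in for whatever hook launches your game and lets the trained bot attempt one level, and the run count and pass-rate threshold are arbitrary. Each level is played several times with different seeds, because when the game contains random elements a single run says little about whether something is actually broken.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class LevelResult:
    level: str
    runs: int
    passed: int

    @property
    def pass_rate(self) -> float:
        return self.passed / self.runs


def run_regression(
    levels: list[str],
    play_level: Callable[[str, int], bool],
    runs: int = 20,
    min_pass_rate: float = 0.9,
) -> tuple[list[LevelResult], list[str]]:
    """Let the bot play each level `runs` times and flag suspected regressions.

    `play_level(level, seed)` is a hypothetical hook: it should start the game
    with the given seed, let the trained bot play, and return True on success.
    """
    results = []
    for level in levels:
        passed = sum(play_level(level, seed) for seed in range(runs))
        results.append(LevelResult(level, runs, passed))
    regressions = [r.level for r in results if r.pass_rate < min_pass_rate]
    return results, regressions


# Usage with a fake hook that pretends the latest commit broke half of the castle runs.
def fake_play_level(level: str, seed: int) -> bool:
    return level != "castle" or seed % 2 == 0


results, regressions = run_regression(["overworld", "castle"], fake_play_level)
for r in results:
    print(f"{r.level}: {r.passed}/{r.runs} passed")
print("possible regressions:", regressions)
```

With the fake hook, the overworld passes 20/20 runs while the castle passes only 10/20, so the report flags `castle` as a possible regression for developers and QA to look at.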

You may ask: why not just record a playthrough and let the bot replay it? Why train a bot with AI at all? In some cases, a recorded playthrough might complete the test, but if your game has any randomness, replaying a static play session won’t work. Game logic almost always contains random elements; in a racing game, for example, collisions with other cars change how each run plays out.

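That’s why we train the bot using imitation learning: from the recorded playthroughs, the bot learns which action to take in a given situation instead of memorizing a fixed sequence of inputs. This makes it flexible enough to adapt to randomness and minor changes in the level.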
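Imitation learning covers a range of methods; the simplest is behavioral cloning, which learns a mapping from what the bot observes to the action the human took in that situation. Below is a minimal, table-based sketch of behavioral cloning, assuming a hypothetical observation of (car lane, blocked lane ahead) and made-up demonstration data. A production bot would more likely use a neural network than a lookup table, but the principle is the same: because the policy reacts to observations rather than replaying timestamps, it can handle situations that differ from the recording.

```python
from collections import Counter, defaultdict

# Hypothetical observation: (car_lane, blocked_lane_ahead). Actions: -1 left, 0 stay, +1 right.
Observation = tuple[int, int]


def train_behavior_cloning(demos: list[tuple[Observation, int]]):
    """Behavioral cloning with a lookup table.

    For each observation in the recording, keep the action the human chose
    most often. Observations never seen in the recording fall back to the
    closest recorded one (Manhattan distance).
    """
    votes: defaultdict[Observation, Counter] = defaultdict(Counter)
    for obs, action in demos:
        votes[obs][action] += 1
    table = {obs: counts.most_common(1)[0][0] for obs, counts in votes.items()}

    def policy(obs: Observation) -> int:
        if obs in table:
            return table[obs]
        nearest = min(table, key=lambda seen: sum(abs(a - b) for a, b in zip(seen, obs)))
        return table[nearest]

    return policy


# (observation, action) pairs from a recorded human playthrough (made-up data).
demos = [
    ((1, 1), -1), ((1, 1), -1), ((1, 1), 1),  # mostly dodged left, once right
    ((0, 0), 1),
    ((2, 2), -1),
    ((1, 0), 0),
    ((0, 2), 0),
]
policy = train_behavior_cloning(demos)
print(policy((1, 1)))  # -1: the majority choice in the recording
print(policy((2, 0)))  # 0: unseen, so it copies the nearest recorded observation (1, 0)
```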

Exploratory Testing

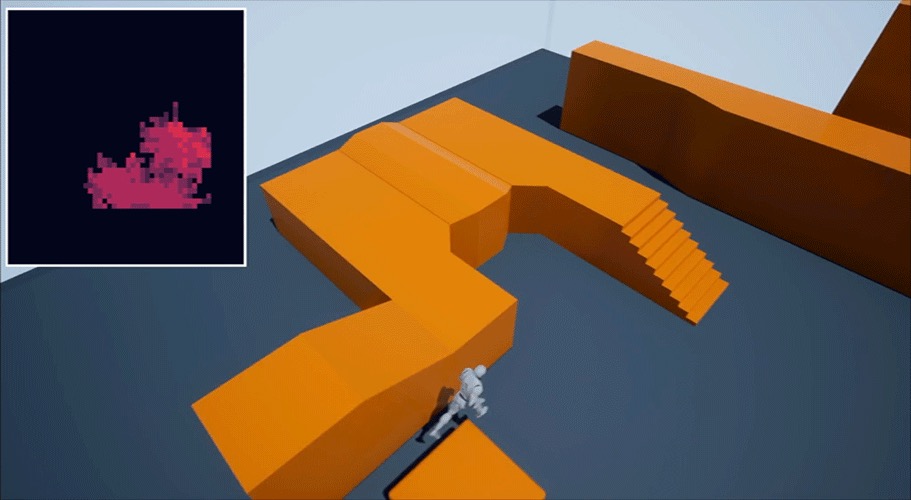

This is a completely different way of testing your game. An exploratory bot doesn’t need a recorded play session. Instead, it reports back once it fulfills a predefined objective, such as finding a hole in the geometry and falling out of the level.

Exploratory bots can find glitches in your game that could take days of manual testing to expose. With AI, we can even have thousands of bots seeking out all parts of your game simultaneously.

Here’s how exploratory testing works:

- We start the bot in a known and safe position in the level.

- One or more bots explore the level, e.g., by performing random movements.

- The bot stops when it fulfills a criterion, e.g., it falls out of the level.

- Developers and QA continuously receive reports on the bot’s performance.
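The exploration loop described in these steps can be sketched in a few lines of Python. This is a minimal illustration under simple assumptions: the level is a hypothetical ASCII grid with one hole in its geometry, the bot moves at random, and the stop criterion is stepping into the hole or off the map. A real bot would act through the game engine rather than a grid, but the structure (safe start, random moves, stop on a criterion, report) is the same.

```python
import random

# Hypothetical level: '#' = wall, '.' = floor, ' ' = a hole in the geometry.
LEVEL = [
    "#######",
    "#.....#",
    "#.###.#",
    "#...#. ",
    "#######",
]
START = (1, 1)  # known, safe starting position (row, column)
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def explore(level: list[str], start: tuple[int, int], seed: int, max_steps: int = 10_000):
    """Random-walk from `start` until the bot falls out of the level.

    Returns a small report dict, or None if the step budget runs out.
    """
    rng = random.Random(seed)
    row, col = start
    for step in range(1, max_steps + 1):
        dr, dc = rng.choice(MOVES)
        r, c = row + dr, col + dc
        outside = not (0 <= r < len(level) and 0 <= c < len(level[r]))
        if outside or level[r][c] == " ":
            return {"seed": seed, "steps": step, "fell_out_at": (r, c)}
        if level[r][c] != "#":  # walls block movement; floor tiles are walkable
            row, col = r, c
    return None


# Several bots, each with its own seed, explore from the same safe start.
for report in (explore(LEVEL, START, seed) for seed in range(4)):
    print(report)
```

Each report tells developers and QA where the bot escaped and which seed reproduces the run.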

The bots can produce a heat map of the areas they have explored. For quick results, multiple bots can play simultaneously, each at up to 20 times the speed of a human player, all reading from and adding to the same heat map.
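How the bots use the shared heat map isn't specified here. One plausible approach, assumed in this minimal sketch, is count-based exploration: each bot reads the map, moves to its least-visited neighboring cell, and then records its own visit, so the bots spread out instead of retracing each other's steps. The ring-shaped level, start positions, and tick count are made up for illustration.

```python
import random
from collections import Counter

# Hypothetical ring-shaped level: '#' = wall, '.' = floor.
LEVEL = [
    "##########",
    "#........#",
    "#.######.#",
    "#........#",
    "##########",
]
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def floor_neighbors(pos: tuple[int, int]) -> list[tuple[int, int]]:
    r, c = pos
    return [(r + dr, c + dc) for dr, dc in MOVES if LEVEL[r + dr][c + dc] != "#"]


def explore_together(starts: list[tuple[int, int]], steps: int, seed: int = 0) -> Counter:
    """Advance all bots one move per tick, sharing a single heat map.

    Each bot reads the map to pick its least-visited neighbor (ties broken
    at random) and then adds its own visit to the same map.
    """
    rng = random.Random(seed)
    heat = Counter(starts)
    bots = list(starts)
    for _ in range(steps):
        for i, pos in enumerate(bots):
            options = floor_neighbors(pos)
            fewest = min(heat[p] for p in options)
            bots[i] = rng.choice([p for p in options if heat[p] == fewest])
            heat[bots[i]] += 1
    return heat


heat = explore_together(starts=[(1, 1), (3, 8)], steps=300)
floor = [(r, c) for r, line in enumerate(LEVEL) for c, ch in enumerate(line) if ch == "."]
print(f"visited {len(heat)} of {len(floor)} floor cells")
print("visits per cell: min", min(heat[p] for p in floor), "max", max(heat[p] for p in floor))
```

Because every bot steers toward the coldest spots on the shared map, the visit counts should stay fairly even across the level rather than piling up near the start positions.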

We don’t need to train a bot or use a neural network. All we need is a goal provided by the developers or QA, e.g., escape the level.

Escaping the Minus World

To use bots for game testing, we need a few things set up. Most importantly, the bot must be able to return to a known, saved state. That’s usually not an issue, since saving and loading are common game features. If the game has no save feature, we can store all the actions required to reach the desired starting point and execute them before each test.
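The fallback of storing and re-executing the setup actions can be sketched as follows. `ToyGame`, its seeded enemy movement, and the action names are all hypothetical; the point is that a fresh game plus the stored inputs reproduces the same starting state every time, which is what a save file would otherwise provide.

```python
import random

MOVES = {"left": (-1, 0), "right": (1, 0), "up": (0, 1), "down": (0, -1)}


class ToyGame:
    """Hypothetical game with no save/load feature."""

    def __init__(self, seed: int):
        # All game randomness goes through one seeded RNG, so runs are reproducible.
        self.rng = random.Random(seed)
        self.player = (0, 0)
        self.enemy = (self.rng.randint(5, 9), self.rng.randint(5, 9))

    def step(self, action: str) -> None:
        dx, dy = MOVES[action]
        self.player = (self.player[0] + dx, self.player[1] + dy)
        # The enemy wanders randomly every tick.
        ex, ey = self.enemy
        self.enemy = (ex + self.rng.choice((-1, 0, 1)), ey + self.rng.choice((-1, 0, 1)))

    def snapshot(self) -> tuple:
        return (self.player, self.enemy)


def load_checkpoint(seed: int, setup_actions: list[str]) -> ToyGame:
    """Stand-in for 'load game': start fresh, then replay the stored actions."""
    game = ToyGame(seed)
    for action in setup_actions:
        game.step(action)
    return game


SETUP = ["right", "right", "up", "up", "up"]  # stored path to the test's start point

first = load_checkpoint(seed=42, setup_actions=SETUP)
second = load_checkpoint(seed=42, setup_actions=SETUP)
print(first.player)                           # (2, 3)
print(first.snapshot() == second.snapshot())  # True: same seed, same actions, same state
```

This only works if the game is deterministic given the stored inputs, for instance by fixing the random seed as above. Otherwise, the setup replay runs into the same randomness problem as replaying a static playthrough.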

AI has a huge potential for automated game testing. It’s time we let ourselves out of the Minus World’s infinite loop and leave the repetitive QA tasks to the bots. Who knows, maybe your next tester will be an AI?