One of the biggest challenges in creating characters for video games is that you often have to replicate real-world ideas or concepts that players take for granted, and then tweak them so they are fun to play against. There’s no better example than stealth games. Whether you’re playing Splinter Cell, Invisible, Inc., or Dishonored, it’s all about letting players identify the perfect opportunity to sneak into a location, do their business, and get out successfully. They might have to eliminate an enemy or steal an object, but they want to cause as little commotion as possible. It’s a combination of preparation and planning, but also of understanding how and when guards and other security systems will detect you as you move through the space.

This is a difficult balancing act for non-player characters, because you have to give them sensors that let them see and hear what’s happening around them, and then dumb those senses down so the player can move around without being spotted instantly. Thief: The Dark Project, released back in 1998, set the standard for AI in pretty much every stealth game that followed it: players sneak into heavily fortified structures, evade guards, and go about their business. To make this work in Thief, the developers had to create reproductions of human senses that are not especially realistic.

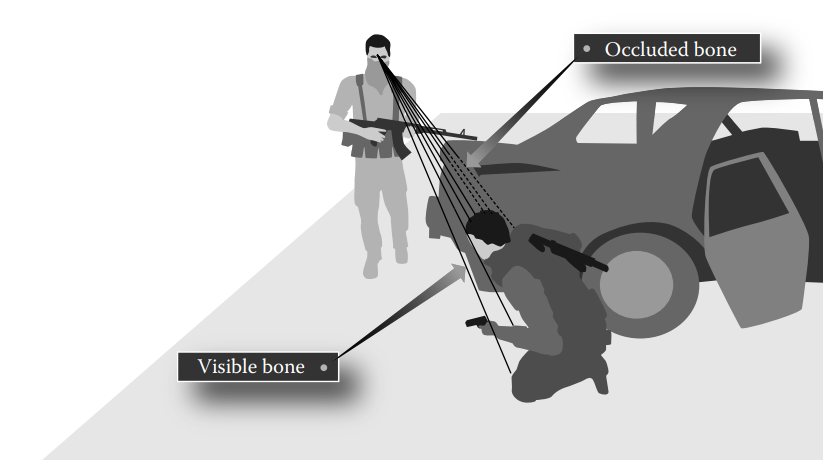

Consider vision first. A common way to reproduce it in games is a ‘vision cone’: a fixed, cone-shaped range extending in front of the character’s head. If you walk into that range and nothing blocks the character’s view, then the character has ‘spotted’ you. This gets more complicated once you factor in things like smoke and lighting, because as far as the game engine is concerned, the character can still see you through them. So you have to explicitly pretend that these things impair their vision. More recent games, such as Splinter Cell or The Last of Us, don’t just use a cone; they also count how many parts of the player’s body are visible to the character, which influences how quickly the character can spot you.
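To make the cone-plus-body-parts idea concrete, here is a minimal 2D sketch. It assumes the player’s body is approximated by a few sample points, walls are line segments, and lighting is a single 0..1 multiplier; all names (`Guard`, `in_vision_cone`, `visibility`) and tuning values are hypothetical, not taken from any of the games mentioned.

```python
import math
from dataclasses import dataclass

@dataclass
class Guard:
    x: float
    y: float
    facing: float                          # heading in radians (0 = +x axis)
    view_range: float = 10.0               # hypothetical tuning value
    half_angle: float = math.radians(45)   # half of the cone's opening angle

def in_vision_cone(guard, px, py):
    """True if (px, py) is within the guard's view range and cone angle."""
    dx, dy = px - guard.x, py - guard.y
    dist = math.hypot(dx, dy)
    if dist > guard.view_range:
        return False
    if dist == 0:
        return True
    # Signed angle between the facing and the direction to the point, wrapped to [-pi, pi)
    diff = (math.atan2(dy, dx) - guard.facing + math.pi) % (2 * math.pi) - math.pi
    return abs(diff) <= guard.half_angle

def _cross(o, a, b):
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def blocks(eye, target, wall):
    """True if the sight line eye->target strictly crosses the wall segment.
    Touching or collinear cases are ignored to keep the sketch short."""
    w0, w1 = wall
    return (_cross(w0, w1, eye) * _cross(w0, w1, target) < 0 and
            _cross(eye, target, w0) * _cross(eye, target, w1) < 0)

def visibility(guard, body_points, walls, light_level=1.0):
    """Fraction of sampled body points the guard can see, scaled by lighting (0..1).
    Lighting is applied as a plain multiplier because a raycast ignores darkness."""
    eye = (guard.x, guard.y)
    seen = sum(
        1 for p in body_points
        if in_vision_cone(guard, *p) and not any(blocks(eye, p, w) for w in walls)
    )
    return seen / len(body_points) * light_level
```

For example, a guard at the origin facing along +x with a low wall hiding one of three body points would get a visibility of 2/3, and only 1/3 in half-light. A higher value could then drive how fast the character detects you.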

Sound raises the same challenges, and it’s even harder to get right. Simulating how a sound travels is expensive, because calculating the occlusion and reflection of sound waves is complicated. So the trick is often to approximate it as cheaply as possible while keeping it believable. And even once the AI character has heard a sound, you then have to muddy the information: you don’t want the character to walk to the exact location in the world where the sound happened, but rather somewhere close by.
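One cheap approximation, sketched below under stated assumptions, skips wave simulation entirely: loudness falls off with distance and is halved for each wall in between (the caller is assumed to count the walls, e.g. with a raycast). If the guard hears it, they investigate a random point near the source whose error radius grows with distance. The function names, the halving rule, and the threshold are all hypothetical choices for illustration.

```python
import math
import random

HEARING_THRESHOLD = 0.5  # hypothetical tuning value

def perceived_loudness(volume, source, listener, walls_between):
    """Cheap stand-in for propagation: inverse-distance falloff plus a flat
    penalty per wall, instead of simulating occlusion and reflections."""
    falloff = volume / (1.0 + math.dist(source, listener))
    return falloff * (0.5 ** walls_between)  # assumption: each wall halves loudness

def investigation_point(source, listener, rng, error_per_metre=0.2):
    """Pick a point near the sound source rather than the exact spot.
    The error radius grows with distance, so far-away sounds are vaguer."""
    radius = error_per_metre * math.dist(source, listener)
    angle = rng.uniform(0.0, 2.0 * math.pi)
    r = radius * math.sqrt(rng.random())  # sqrt gives a uniform spread over the disc
    return (source[0] + r * math.cos(angle), source[1] + r * math.sin(angle))

def on_sound(volume, source, listener, walls_between, rng):
    """Return where the guard should go to investigate, or None if unheard."""
    if perceived_loudness(volume, source, listener, walls_between) < HEARING_THRESHOLD:
        return None
    return investigation_point(source, listener, rng)
```

Passing a seeded `random.Random` keeps the "muddied" destination reproducible for debugging, while still sending the guard somewhere close by rather than to the exact spot.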

Once you get these sensors working, you then have to figure out priorities. If the character sees one thing and then another, which one is more important? If they also heard something while all that was happening, does that take priority? It can all get pretty hairy unless you establish priorities for the different sensory readings, along with rules for how each should be handled.
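A simple way to express such priorities is a ranked list of stimulus types plus an explicit interruption rule. The sketch below assumes a hypothetical ordering (seeing the player beats a gunshot, which beats an open door, which beats footsteps) and breaks ties by recency; a real game would tune both the ranking and the rules.

```python
from dataclasses import dataclass
from enum import IntEnum

class Kind(IntEnum):
    # Higher value = more important. This ordering is an illustrative assumption.
    FOOTSTEP = 1
    OPEN_DOOR = 2
    GUNSHOT = 3
    SAW_PLAYER = 4

@dataclass
class Percept:
    kind: Kind
    position: tuple
    time: float  # when the stimulus was sensed, in seconds

def most_important(percepts):
    """Pick the percept to react to: highest priority first, most recent on ties."""
    if not percepts:
        return None
    return max(percepts, key=lambda p: (p.kind, p.time))

def should_interrupt(current, new):
    """Rule: only abandon the current reaction for something strictly more
    important, or for a newer report of the same kind (e.g. an updated position)."""
    if current is None:
        return True
    if new.kind != current.kind:
        return new.kind > current.kind
    return new.time > current.time
```

With this rule, a guard chasing a sighting of the player ignores a later gunshot, while a guard checking out footsteps drops them to respond to it.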

But once it’s all in there, you then need to make sure that players understand how these senses work. Some games add a short delay between the moment you are first detected and the character acting on it: this might come from the sensor’s detection rate, or from an animation that makes it look like the character is reacting to what their senses picked up. Games like Far Cry show a detection meter filling up, while in Splinter Cell characters investigate ‘disturbances’: they act as if they didn’t really see you, and walk over to get a closer look. Some games even show you the vision cones: you could literally see them on the minimap in Metal Gear Solid, while Third Eye Crime has them appear in the world as characters move around.
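The delay between being seen and being acted on can be modelled as a meter that fills while you are visible and drains when you are not, with an intermediate "suspicious" band in which the character investigates instead of attacking. This is a minimal sketch with hypothetical rates and thresholds, not how Far Cry or Splinter Cell actually implement it.

```python
from enum import Enum

class Alert(Enum):
    IDLE = "idle"
    SUSPICIOUS = "suspicious"  # e.g. walk over to investigate a 'disturbance'
    ALERTED = "alerted"        # full detection: the guard acts on it

class DetectionMeter:
    """Fills while the player is visible and drains otherwise, giving a short
    window between first being seen and the guard fully reacting."""

    def __init__(self, fill_rate=1.0, drain_rate=0.25, suspicious_at=0.4):
        self.level = 0.0
        self.fill_rate = fill_rate      # meter units per second at full visibility
        self.drain_rate = drain_rate    # meter units per second while unseen
        self.suspicious_at = suspicious_at
        self.state = Alert.IDLE

    def update(self, visibility, dt):
        """visibility in [0, 1] (e.g. fraction of body parts seen); dt in seconds."""
        if self.state is Alert.ALERTED:
            return self.state  # once fully detected, stay alerted
        if visibility > 0:
            self.level += self.fill_rate * visibility * dt
        else:
            self.level -= self.drain_rate * dt
        self.level = max(0.0, min(1.0, self.level))
        if self.level >= 1.0:
            self.state = Alert.ALERTED
        elif self.level >= self.suspicious_at:
            self.state = Alert.SUSPICIOUS
        else:
            self.state = Alert.IDLE
        return self.state
```

Exposing `level` to the UI is one way to show a Far Cry-style meter, and the `SUSPICIOUS` band is where an "investigate the disturbance" behaviour could hook in.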

But the one sense most games avoid is smell. You can often communicate to a player whether an AI can see you, or whether it heard you make a sound. Smell, however, is really difficult to get right. Designing an olfactory sense for a game is already a challenge, and communicating to the player whether a character can smell them, or even how smelly they are, is something most games steer clear of.

Be sure to stick around for more of our History of AI series here at modl.ai. And if you’re keen to learn more about how stealth is implemented in video games, be sure to check out the episode of AI and Games all about the ‘pillars of stealth’ in Splinter Cell.